C# - GPU-accelerating the Phi-4-multimodal model (using ORT)

Something seemed off when running the "Phi-4-multimodal" model in the previous article.

C# / Foundry Local - How to use the Phi-4-multimodal model

; https://www.sysnet.pe.kr/2/0/13957

Even on an RTX 4060 Ti, answers were far too slow (around 109 seconds each). On top of that, while this was happening, the GPU usage on Task Manager's Performance tab barely moved, and CPU usage went up instead.

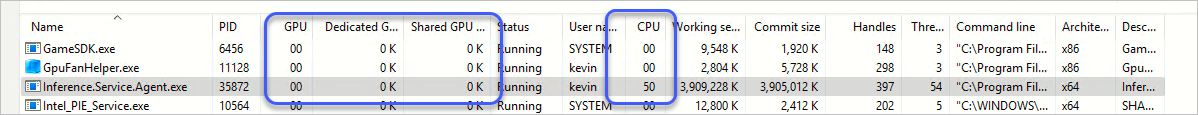

As the screenshot above shows, Inference.Service.Agent.exe takes more than 60% of the CPU (on a 16-core system), while GPU, Dedicated GPU memory, and Shared GPU memory all read 0. Responses were in fact so slow that I had to raise NetworkTimeout through OpenAIClientOptions (from its default of 1 minute 40 seconds):

```csharp
using OpenAI;

OpenAIClientOptions options = new OpenAIClientOptions();
// Default is 0:01:40; give slow, CPU-bound inference time to finish
options.NetworkTimeout = TimeSpan.FromMinutes(30);
```
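For comparison, here is a minimal Python sketch of the same idea: a standard-library request to Foundry Local's OpenAI-compatible endpoint with a 30-minute timeout. The base URL (including port 5273), the `/chat/completions` route, and the model name are placeholders assumed for illustration; use the values your Foundry Local instance reports. Note that urllib's `timeout` bounds each blocking socket operation rather than the whole request, so it is only roughly analogous to `NetworkTimeout`.

```python
import json
import urllib.request

# Placeholder values (assumptions): Foundry Local picks its own port, and the
# model name must match what your local service reports.
BASE_URL = "http://localhost:5273/v1"
MODEL = "Phi-4-multimodal-instruct"


def build_chat_request(base_url: str, model: str, prompt: str) -> urllib.request.Request:
    """Build (but do not send) an OpenAI-style chat completion request."""
    body = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url=base_url.rstrip("/") + "/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def send(request: urllib.request.Request, timeout_seconds: float = 30 * 60) -> dict:
    """Send the request and parse the JSON reply.

    timeout_seconds (30 minutes, like TimeSpan.FromMinutes(30)) bounds each
    blocking socket operation, not the request as a whole.
    """
    with urllib.request.urlopen(request, timeout=timeout_seconds) as response:
        return json.loads(response.read().decode("utf-8"))
```

Usage would be `send(build_chat_request(BASE_URL, MODEL, "Describe the image."))`; splitting construction from sending keeps the request inspectable without a running server.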

With heavy workloads such as image inference, requests exceed the default timeout and even fail with an error like this:

```
Unhandled exception. System.AggregateException: Retry failed after 4 tries. (The operation was cancelled because it exceeded the configured timeout of 0:01:40. ) (The operation was cancelled because it exceeded the configured timeout of 0:01:40. ) (The operation was cancelled because it exceeded the configured timeout of 0:01:40. ) (The operation was cancelled because it exceeded the configured timeout of 0:01:40. )
 ---> System.Threading.Tasks.TaskCanceledException: The operation was cancelled because it exceeded the configured timeout of 0:01:40.
 ---> System.Threading.Tasks.TaskCanceledException: The operation was canceled.
 ---> System.Threading.Tasks.TaskCanceledException: The operation was canceled.
 ---> System.IO.IOException: Unable to read data from the transport connection: An established connection was aborted by the software in your host machine..
 ---> System.Net.Sockets.SocketException (10053): An established connection was aborted by the software in your host machine.
   at System.Net.Sockets.NetworkStream.Read(Span`1 buffer)
   --- End of inner exception stack trace ---
   at System.Net.Sockets.NetworkStream.Read(Span`1 buffer)
   at System.Net.Http.HttpConnection.InitialFillAsync(Boolean async)
   at System.Net.Http.HttpConnection.SendAsync(HttpRequestMessage request, Boolean async, CancellationToken cancellationToken)
   --- End of inner exception stack trace ---
   at System.Net.Http.HttpConnection.SendAsync(HttpRequestMessage request, Boolean async, CancellationToken cancellationToken)
   at System.Net.Http.HttpConnectionPool.SendWithVersionDetectionAndRetryAsync(HttpRequestMessage request, Boolean async, Boolean doRequestAuth, CancellationToken cancellationToken)
   at System.Net.Http.HttpMessageHandlerStage.Send(HttpRequestMessage request, CancellationToken cancellationToken)
   at System.Net.Http.DiagnosticsHandler.SendAsync(HttpRequestMessage request, Boolean async, CancellationToken cancellationToken)
   at System.Net.Http.HttpMessageHandlerStage.Send(HttpRequestMessage request, CancellationToken cancellationToken)
   at System.Net.Http.Metrics.MetricsHandler.SendAsync(HttpRequestMessage request, Boolean async, CancellationToken cancellationToken)
   at System.Net.Http.HttpMessageHandlerStage.Send(HttpRequestMessage request, CancellationToken cancellationToken)
   at System.Net.Http.SocketsHttpHandler.Send(HttpRequestMessage request, CancellationToken cancellationToken)
   at System.Net.Http.HttpClient.Send(HttpRequestMessage request, HttpCompletionOption completionOption, CancellationToken cancellationToken)
   --- End of inner exception stack trace ---
   at System.Net.Http.HttpClient.HandleFailure(Exception e, Boolean telemetryStarted, HttpResponseMessage response, CancellationTokenSource cts, CancellationToken cancellationToken, CancellationTokenSource pendingRequestsCts)
   at System.Net.Http.HttpClient.Send(HttpRequestMessage request, HttpCompletionOption completionOption, CancellationToken cancellationToken)
   at System.ClientModel.Primitives.HttpClientPipelineTransport.ProcessSyncOrAsync(PipelineMessage message, Boolean async)
   at System.ClientModel.Internal.TaskExtensions.EnsureCompleted(ValueTask task)
   at System.ClientModel.Primitives.HttpClientPipelineTransport.ProcessCore(PipelineMessage message)
   at System.ClientModel.Primitives.PipelineTransport.ProcessSyncOrAsync(PipelineMessage message, Boolean async)
...[omitted]...
```

But the model we downloaded is clearly the "gpu" version... so why? The Foundry Local documentation says:

Foundry Local architecture - Hardware abstraction layer

; https://learn.microsoft.com/en-us/azure/ai-foundry/foundry-local/concepts/foundry-local-architecture#hardware-abstraction-layer

The hardware abstraction layer ensures that Foundry Local can run on various devices by abstracting the underlying hardware. To optimize performance based on the available hardware, Foundry Local supports:

multiple execution providers, such as NVIDIA CUDA, AMD, Qualcomm, Intel.

multiple device types, such as CPU, GPU, NPU.

So it supports a variety of execution providers, CUDA included. And indeed, with the "phi-3-mini-4k" model tested in the previous article, you can see the Inference.Service.Agent.exe process consuming GPU resources. (It seems that models offered via "foundry model list" are GPU-accelerated without any extra steps.)

(If anyone knows how to run the "Phi-4-multimodal-instruct" model with GPU acceleration in Foundry Local, please leave a comment. ^^)

Interestingly, the "Phi-4-multimodal-instruct" repo explains how to use a phi4-mm.py script with CUDA acceleration:

// Tested on WSL + Ubuntu 24.04

```shell
# Install the CUDA package of ONNX Runtime GenAI
pip install --pre onnxruntime-genai-cuda

# Please adjust the model directory (-m) accordingly
curl https://raw.githubusercontent.com/microsoft/onnxruntime-genai/main/examples/python/phi4-mm.py -o phi4-mm.py
python phi4-mm.py -m gpu/gpu-int4-rtn-block-32 -e cuda
```

Because it builds on the onnxruntime_genai package, the script turns out to be simpler than you might expect:

```python
# Copyright (c) Microsoft Corporation. All rights reserved.
# Licensed under the MIT License

import argparse
import os
import glob
import time
from pathlib import Path

import onnxruntime_genai as og

# og.set_log_options(enabled=True, model_input_values=True, model_output_values=True)

def _find_dir_contains_sub_dir(current_dir: Path, target_dir_name):
    curr_path = Path(current_dir).absolute()
    target_dir = glob.glob(target_dir_name, root_dir=curr_path)
    if target_dir:
        return Path(curr_path / target_dir[0]).absolute()
    else:
        if curr_path.parent == curr_path:
            # Root dir
            return None
        return _find_dir_contains_sub_dir(curr_path / '..', target_dir_name)


def _complete(text, state):
    return (glob.glob(text + "*") + [None])[state]


def get_paths(modality, user_provided_paths, default_paths, interactive):
    paths = None

    if interactive:
        try:
            import readline
            readline.set_completer_delims(" \t\n;")
            readline.parse_and_bind("tab: complete")
            readline.set_completer(_complete)
        except ImportError:
            # Not available on some platforms. Ignore it.
            pass
        paths = [
            path.strip()
            for path in input(
                f"{modality.capitalize()} Path (comma separated; leave empty if no {modality}): "
            ).split(",")
        ]
    else:
        paths = user_provided_paths if user_provided_paths else default_paths

    paths = [path for path in paths if path]
    return paths


def run(args: argparse.Namespace):
    print("Loading model...")
    config = og.Config(args.model_path)
    if args.execution_provider != "follow_config":
        config.clear_providers()
        if args.execution_provider != "cpu":
            print(f"Setting model to {args.execution_provider}...")
            config.append_provider(args.execution_provider)
    model = og.Model(config)
    print("Model loaded")

    processor = model.create_multimodal_processor()
    tokenizer_stream = processor.create_stream()

    interactive = not args.non_interactive

    while True:
        image_paths = get_paths(
            modality="image",
            user_provided_paths=args.image_paths,
            default_paths=[str(_find_dir_contains_sub_dir(Path(__file__).parent, "test") / "test_models" / "images" / "australia.jpg")],
            interactive=interactive
        )
        audio_paths = get_paths(
            modality="audio",
            user_provided_paths=args.audio_paths,
            default_paths=[str(_find_dir_contains_sub_dir(Path(__file__).parent, "test") / "test_models" / "audios" / "1272-141231-0002.mp3")],
            interactive=interactive
        )

        images = None
        audios = None
        prompt = "<|user|>\n"

        # Get images
        if len(image_paths) == 0:
            print("No image provided")
        else:
            for i, image_path in enumerate(image_paths):
                if not os.path.exists(image_path):
                    raise FileNotFoundError(f"Image file not found: {image_path}")
                print(f"Using image: {image_path}")
                prompt += f"<|image_{i+1}|>\n"
            images = og.Images.open(*image_paths)

        # Get audios
        if len(audio_paths) == 0:
            print("No audio provided")
        else:
            for i, audio_path in enumerate(audio_paths):
                if not os.path.exists(audio_path):
                    raise FileNotFoundError(f"Audio file not found: {audio_path}")
                print(f"Using audio: {audio_path}")
                prompt += f"<|audio_{i+1}|>\n"
            audios = og.Audios.open(*audio_paths)

        if interactive:
            text = input("Prompt: ")
        else:
            if args.prompt:
                text = args.prompt
            else:
                text = "Does the audio summarize what is shown in the image? If not, what is different?"
        prompt += f"{text}<|end|>\n<|assistant|>\n"

        print("Processing inputs...")
        inputs = processor(prompt, images=images, audios=audios)
        print("Processor complete.")

        print("Generating response...")
        params = og.GeneratorParams(model)
        params.set_inputs(inputs)
        params.set_search_options(max_length=7680)

        generator = og.Generator(model, params)
        start_time = time.time()

        while not generator.is_done():
            generator.generate_next_token()

            new_token = generator.get_next_tokens()[0]
            print(tokenizer_stream.decode(new_token), end="", flush=True)

        print()
        total_run_time = time.time() - start_time
        print(f"Total Time : {total_run_time:.2f}")

        for _ in range(3):
            print()

        # Delete the generator to free the captured graph before creating another one
        del generator

        if not interactive:
            break


if __name__ == "__main__":
    parser = argparse.ArgumentParser()
    parser.add_argument(
        "-m", "--model_path", type=str, required=True, help="Path to the folder containing the model"
    )
    parser.add_argument(
        "-e", "--execution_provider", type=str, required=False, default='follow_config', choices=["cpu", "cuda", "dml", "follow_config"], help="Execution provider to run the ONNX Runtime session with. Defaults to follow_config that uses the execution provider listed in the genai_config.json instead."
    )
    parser.add_argument(
        "--image_paths", nargs='*', type=str, required=False, help="Path to the images, mainly for CI usage"
    )
    parser.add_argument(
        "--audio_paths", nargs='*', type=str, required=False, help="Path to the audios, mainly for CI usage"
    )
    parser.add_argument(
        '-pr', '--prompt', required=False, help='Input prompts to generate tokens from, mainly for CI usage'
    )
    parser.add_argument(
        '--non-interactive', action=argparse.BooleanOptionalAction, required=False, help='Non-interactive mode, mainly for CI usage'
    )
    args = parser.parse_args()
    run(args)
```
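The prompt handling inside run() is worth isolating: each attached image or audio file gets a numbered placeholder (<|image_1|>, <|audio_1|>, ...) ahead of the user text, and the whole turn is wrapped in <|user|> ... <|end|> followed by <|assistant|>. The sketch below pulls that logic into a pure function so the string can be inspected without loading the model; build_phi4mm_prompt is a hypothetical name, not part of onnxruntime_genai.

```python
def build_phi4mm_prompt(text: str, num_images: int = 0, num_audios: int = 0) -> str:
    """Rebuild the prompt string that phi4-mm.py assembles.

    One numbered placeholder per attached image, then per attached audio clip,
    followed by the user text and the assistant turn marker.
    """
    prompt = "<|user|>\n"
    for i in range(num_images):
        prompt += f"<|image_{i + 1}|>\n"
    for i in range(num_audios):
        prompt += f"<|audio_{i + 1}|>\n"
    return prompt + f"{text}<|end|>\n<|assistant|>\n"
```

With no attachments this reduces to a plain text-only turn, which is also the template a text-only client of the same model would need.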

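It configures an onnxruntime_genai.Config instance to use cuda and then creates an onnxruntime_genai.Model. Aha, so there is an option to choose the execution provider explicitly. ^^ You can also check which execution providers are currently available with calls like these (from the onnxruntime package):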

```python
import onnxruntime as ort

print(ort.get_device())
print(ort.get_available_providers())

# Output:
# GPU
# ['TensorrtExecutionProvider', 'CUDAExecutionProvider', 'CPUExecutionProvider']
```
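Building on that output, a small helper could choose the -e value for phi4-mm.py automatically. This is a sketch: pick_provider is hypothetical, and the mapping from ONNX Runtime provider names to the short names "cuda" and "dml" is an assumption based on the script's choices list. Keep in mind that the list describes the onnxruntime package; a provider being listed does not guarantee that its native dependencies (CUDA, cuDNN) will load, as the errors later in this article show.

```python
# Assumed mapping from ONNX Runtime provider names (as returned by
# ort.get_available_providers()) to the short names phi4-mm.py accepts for -e.
ORT_TO_GENAI = {
    "CUDAExecutionProvider": "cuda",
    "DmlExecutionProvider": "dml",
}


def pick_provider(available, preferred=("cuda", "dml")) -> str:
    """Return the first preferred short name whose ORT provider is available,
    falling back to "cpu"."""
    short_names = {ORT_TO_GENAI[name] for name in available if name in ORT_TO_GENAI}
    for name in preferred:
        if name in short_names:
            return name
    return "cpu"
```

With the output above, pick_provider(ort.get_available_providers()) would return "cuda", which could then be passed to config.append_provider().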

It would be nice if Foundry Local also let you set the execution provider manually like this (instead of choosing it automatically), but, being a preview release, it doesn't seem to offer that yet.
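One thing you can check without Foundry Local's help is which providers a downloaded model folder asks for, since phi4-mm.py's default follow_config mode uses the providers listed in genai_config.json (per its -e help text). The sketch below collects every provider name found under a "provider_options" list. That key name and the list-of-single-key-dicts layout are assumptions about genai_config.json, so verify them against your own file; the function names are hypothetical.

```python
import json
from pathlib import Path


def find_configured_providers(config) -> list:
    """Collect provider names from every "provider_options" list in a
    genai_config.json-style dict. Assumed layout: a list of single-key dicts
    such as [{"cuda": {...}}], possibly repeated per sub-model."""
    found = []

    def walk(node):
        if isinstance(node, dict):
            for key, value in node.items():
                if key == "provider_options" and isinstance(value, list):
                    for entry in value:
                        if isinstance(entry, dict):
                            for name in entry:
                                if name not in found:
                                    found.append(name)
                else:
                    walk(value)
        elif isinstance(node, list):
            for item in node:
                walk(item)

    walk(config)
    return found


def providers_for_model_dir(model_dir) -> list:
    """Read <model_dir>/genai_config.json and list the providers it requests."""
    config_path = Path(model_dir) / "genai_config.json"
    with open(config_path, encoding="utf-8") as f:
        return find_configured_providers(json.load(f))
```

For example, providers_for_model_dir("/mnt/c/foundry_cache/models/Phi-4-multimodal-instruct-onnx") would show what the Foundry Local copy of the model is configured for; an empty list presumably means no explicit provider, i.e. the CPU default.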

In any case, ORT (ONNX Runtime) GenAI provides a C# API roughly on par with its Python API:

Generate API (Preview) - Python API

; https://onnxruntime.ai/docs/genai/api/python.html

ONNX Runtime generate() C# API

; https://onnxruntime.ai/docs/genai/api/csharp.html

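// Come to think of it, the OpenAI API's OpenAI.Chat.ChatClient doesn't seem to offer a way to choose the execution provider either.

// Strictly speaking, in the client/server design of the OpenAI API, execution-provider selection belongs on the server side, which actually runs the engine.

// In that sense, it is Foundry Local itself that ought to provide an execution provider option.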

Naturally, you can specify the execution provider here too (just as in the Python example); the difference is that the package you reference must be "Microsoft.ML.OnnxRuntimeGenAI.CUDA" rather than "Microsoft.ML.OnnxRuntimeGenAI". ^^

```csharp
using Microsoft.ML.OnnxRuntimeGenAI;

internal class Program
{
    // 1. Prerequisites: install the CUDA Toolkit and the cuDNN library
    // 2. Switch the package to the CUDA build:
    //    Install-Package Microsoft.ML.OnnxRuntimeGenAI.CUDA
    private static void Main(string[] args)
    {
        string modelPath = @"C:\temp\models\Phi-4-multimodal-instruct-onnx";
        Console.Write("Loading model from " + modelPath + "...");

        using Config onnxConfig = new Config(modelPath);
        // Request the CUDA execution provider explicitly
        onnxConfig.AppendProvider("cuda");

        using Model model = new(onnxConfig);
        Console.Write("Done\n");

        using Tokenizer tokenizer = new(model);
        using TokenizerStream tokenizerStream = tokenizer.CreateStream();

        while (true)
        {
            Console.Write("User:");
            // Phi-4-multimodal chat template (the same one phi4-mm.py builds)
            string prompt = "<|user|>\n" +
                Console.ReadLine() +
                "<|end|>\n<|assistant|>\n";

            var sequences = tokenizer.Encode(prompt);

            using GeneratorParams gParams = new GeneratorParams(model);
            gParams.SetSearchOption("max_length", 200);

            using Generator generator = new(model, gParams);
            generator.AppendTokenSequences(sequences);

            Console.Out.Write("\nAI:");
            while (!generator.IsDone())
            {
                generator.GenerateNextToken();
                // Decode only the newest token of sequence 0 and stream it out
                var token = generator.GetSequence(0)[^1];
                Console.Out.Write(tokenizerStream.Decode(token));
                Console.Out.Flush();
            }
            Console.WriteLine();
        }
    }
}
```

When you run it, the GPU is used, and the answer comes back in about 2.6 seconds (excluding model loading time). (Compared with 109 seconds, that's really fast. ^^)

(The attachment contains the example code for this article.)

By the way, what if you get the following error when running the example above?

```
Loading model from C:\temp\models\Phi-4-multimodal-instruct-onnx...Unhandled exception. Microsoft.ML.OnnxRuntimeGenAI.OnnxRuntimeGenAIException: CUDA execution provider is not enabled in this build.
   at Microsoft.ML.OnnxRuntimeGenAI.Model..ctor(Config config)
   at Program.Main(String[] args) in C:\temp\ConsoleApp1\Program.cs:line 15
```

Microsoft.ML.OnnxRuntimeGenAI.OnnxRuntimeGenAIException: 'CUDA execution provider is not enabled in this build.' #1191

; https://github.com/microsoft/onnxruntime-genai/issues/1191

It happens because the "cuda" provider was enabled while the project references the "Microsoft.ML.OnnxRuntimeGenAI" package instead of "Microsoft.ML.OnnxRuntimeGenAI.CUDA".

Or, what if you get this error?

```
Unhandled exception. Microsoft.ML.OnnxRuntimeGenAI.OnnxRuntimeGenAIException: D:\a\_work\1\s\onnxruntime\core\session\provider_bridge_ort.cc:1778 onnxruntime::ProviderLibrary::Get [ONNXRuntimeError] : 1 : FAIL : Error loading "C:\temp\ConsoleApp1\bin\Debug\net8.0\runtimes\win-x64\native\onnxruntime_providers_cuda.dll" which depends on "cudnn64_9.dll" which is missing. (Error 126: "The specified module could not be found.")
   at Microsoft.ML.OnnxRuntimeGenAI.Model..ctor(Config config)
   at Program.Main(String[] args) in C:\temp\ConsoleApp1\Program.cs:line 15
```

Check whether the NVIDIA cuDNN library is installed:

NVIDIA cuDNN

; https://developer.nvidia.com/cudnn

If it is, the cuDNN DLL will be located at paths roughly like these:

```
C:\> dir /a/s cudnn64_9.dll
...[omitted]...
 Directory of C:\Program Files\NVIDIA\CUDNN\v9.10\bin\11.8

2025-05-14  09:25 AM           266,288 cudnn64_9.dll
               1 File(s)        266,288 bytes

 Directory of C:\Program Files\NVIDIA\CUDNN\v9.10\bin\12.9

2025-05-14  09:43 AM           265,760 cudnn64_9.dll
               1 File(s)        265,760 bytes
...[omitted]...
```

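Then, before the Model is initialized, add the folder for the appropriate version to the PATH environment variable (the last folder name is the CUDA version; the example below uses 12.9):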

```csharp
private static void Main(string[] args)
{
    // Make cudnn64_9.dll resolvable before the Model is created
    // https://developer.nvidia.com/cudnn
    string? path = Environment.GetEnvironmentVariable("PATH");
    path += @";C:\Program Files\NVIDIA\CUDNN\v9.10\bin\12.9";
    Environment.SetEnvironmentVariable("PATH", path);

    // ...[omitted]...
}
```
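Hardcoding v9.10 breaks as soon as cuDNN is upgraded. Here is a minimal Python sketch of the same step that searches the layout shown in the dir listing above, `<root>\v<cuDNN version>\bin\<CUDA version>`, picks the newest cuDNN folder containing cudnn64_9.dll, and puts it on PATH for the current process. The function names are hypothetical, and whether onnxruntime's Python package honours a PATH change made this way on Windows is an assumption I have not verified (os.add_dll_directory() is the other mechanism worth trying).

```python
import os
from pathlib import Path


def _version_key(version_dir: Path) -> tuple:
    # "v9.10" -> (9, 10) so that 9.10 sorts after 9.9
    return tuple(int(part) for part in version_dir.name.lstrip("v").split(".") if part.isdigit())


def find_cudnn_bin(root, cuda_version: str, dll_name: str = "cudnn64_9.dll"):
    """Return the newest <root>/v*/bin/<cuda_version> folder that contains
    dll_name, or None if there is none."""
    candidates = [
        version_dir
        for version_dir in Path(root).glob("v*")
        if (version_dir / "bin" / cuda_version / dll_name).is_file()
    ]
    if not candidates:
        return None
    newest = max(candidates, key=_version_key)
    return newest / "bin" / cuda_version


def prepend_to_path(directory) -> None:
    """Put directory first on PATH for this process (and its children)."""
    os.environ["PATH"] = str(directory) + os.pathsep + os.environ.get("PATH", "")
```

Usage: `find_cudnn_bin(r"C:\Program Files\NVIDIA\CUDNN", "12.9")`, then prepend_to_path() on the result before creating the model. Prepending rather than appending is a deliberate choice, so this folder wins over any other cuDNN copy already on PATH.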

What if running the phi4-mm.py script under WSL on Windows produces this error?

```
$ python phi4-mm.py -m /mnt/c/foundry_cache/models/Phi-4-multimodal-instruct-onnx -e cuda
Loading model...
Setting model to cuda...
Traceback (most recent call last):
  File "/mnt/c/temp/phi4-mm.py", line 174, in <module>
    run(args)
  File "/mnt/c/temp/phi4-mm.py", line 63, in run
    model = og.Model(config)
RuntimeError: /onnxruntime_src/onnxruntime/core/session/provider_bridge_ort.cc:1778 onnxruntime::Provider& onnxruntime::ProviderLibrary::Get() [ONNXRuntimeError] : 1 : FAIL : Failed to load library libonnxruntime_providers_cuda.so with error: libcublasLt.so.12: cannot open shared object file: No such file or directory
```

This happens because the WSL environment hasn't been set up for CUDA. So, following the article below,

Recognizing the NVIDIA GPU (for tensorflow, etc.) in a Windows + WSL2 environment

; https://www.sysnet.pe.kr/2/0/13937#install_cuda

install the required components:

```
$ wget https://developer.download.nvidia.com/compute/cuda/repos/wsl-ubuntu/x86_64/cuda-wsl-ubuntu.pin
$ sudo mv cuda-wsl-ubuntu.pin /etc/apt/preferences.d/cuda-repository-pin-600
$ wget https://developer.download.nvidia.com/compute/cuda/12.9.0/local_installers/cuda-repo-wsl-ubuntu-12-9-local_12.9.0-1_amd64.deb
$ sudo dpkg -i cuda-repo-wsl-ubuntu-12-9-local_12.9.0-1_amd64.deb
$ sudo cp /var/cuda-repo-wsl-ubuntu-12-9-local/cuda-*-keyring.gpg /usr/share/keyrings/
$ sudo apt-get update
$ sudo apt-get -y install cuda-toolkit-12-9

$ ls -l /home/testusr/miniconda3/envs/huggingface-build/lib/python3.10/site-packages/onnxruntime/capi/libonnxruntime_providers_cuda.so
-rwxr-xr-x 1 testusr testusr 407530104 Jun 18 08:34 /home/testusr/miniconda3/envs/huggingface-build/lib/python3.10/site-packages/onnxruntime/capi/libonnxruntime_providers_cuda.so
```

Run it again, and it still fails, this time with a different error message:

```
$ python phi4-mm.py -m /mnt/c/foundry_cache/models/Phi-4-multimodal-instruct-onnx -e cuda
Loading model...
Setting model to cuda...
Traceback (most recent call last):
  File "/mnt/c/temp/phi4-mm.py", line 174, in <module>
    run(args)
  File "/mnt/c/temp/phi4-mm.py", line 63, in run
    model = og.Model(config)
RuntimeError: /onnxruntime_src/onnxruntime/core/session/provider_bridge_ort.cc:1778 onnxruntime::Provider& onnxruntime::ProviderLibrary::Get() [ONNXRuntimeError] : 1 : FAIL : Failed to load library libonnxruntime_providers_cuda.so with error: libcudnn.so.9: cannot open shared object file: No such file or directory
```

Strange, though. Even though the libcudnn.so.9 file clearly exists,

```
$ ls -l /home/testusr/miniconda3/envs/huggingface-build/lib/python3.10/site-packages/nvidia/cudnn/lib/libcudnn.so.9
-rw-r--r-- 1 testusr testusr 125136 Jun 18 08:34 /home/testusr/miniconda3/envs/huggingface-build/lib/python3.10/site-packages/nvidia/cudnn/lib/libcudnn.so.9

$ ldd /home/testusr/miniconda3/envs/huggingface-build/lib/python3.10/site-packages/onnxruntime/capi/libonnxruntime_providers_cuda.so
        linux-vdso.so.1 (0x00007ffe7817e000)
        libcublasLt.so.12 => /usr/local/cuda/targets/x86_64-linux/lib/libcublasLt.so.12 (0x00007f14d7000000)
        libcublas.so.12 => /usr/local/cuda/targets/x86_64-linux/lib/libcublas.so.12 (0x00007f14d0800000)
        libcurand.so.10 => /usr/local/cuda/targets/x86_64-linux/lib/libcurand.so.10 (0x00007f14c6000000)
        libcufft.so.11 => /usr/local/cuda/targets/x86_64-linux/lib/libcufft.so.11 (0x00007f14b4200000)
        libcudart.so.12 => /usr/local/cuda/targets/x86_64-linux/lib/libcudart.so.12 (0x00007f14b3e00000)
        libcudnn.so.9 => not found
        libdl.so.2 => /lib/x86_64-linux-gnu/libdl.so.2 (0x00007f1509133000)
        librt.so.1 => /lib/x86_64-linux-gnu/librt.so.1 (0x00007f150912e000)
        libnvrtc.so.12 => /usr/local/cuda/targets/x86_64-linux/lib/libnvrtc.so.12 (0x00007f14ad536000)
        libpthread.so.0 => /lib/x86_64-linux-gnu/libpthread.so.0 (0x00007f1509129000)
        libstdc++.so.6 => /lib/x86_64-linux-gnu/libstdc++.so.6 (0x00007f14ad2b8000)
        libm.so.6 => /lib/x86_64-linux-gnu/libm.so.6 (0x00007f14d6f17000)
        libgcc_s.so.1 => /lib/x86_64-linux-gnu/libgcc_s.so.1 (0x00007f15090f9000)
        libc.so.6 => /lib/x86_64-linux-gnu/libc.so.6 (0x00007f14ad0a6000)
        /lib64/ld-linux-x86-64.so.2 (0x00007f15217d3000)
```

it is not being resolved ("libcudnn.so.9 => not found" above). Searching turns up a related issue:

sam 2 install failed "libcudnn.so.9: cannot open shared object file: No such file or directory" but libcudnn.so.9 is installed #112

; https://github.com/facebookresearch/sam2/issues/112
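Before reaching for environment variables, it helps to list exactly which dependencies are unresolved. A small sketch that runs ldd and extracts the "=> not found" entries; the regex is based on the ldd output format shown above, and ldd_missing and missing_libraries are hypothetical helper names.

```python
import re
import subprocess

# Matches ldd lines such as "\tlibcudnn.so.9 => not found"
_NOT_FOUND = re.compile(r"^\s*(\S+)\s+=>\s+not found\s*$")


def missing_libraries(ldd_output: str) -> list:
    """Return the sonames that ldd reports as "not found"."""
    return [m.group(1) for m in map(_NOT_FOUND.match, ldd_output.splitlines()) if m]


def ldd_missing(library_path: str) -> list:
    """Run ldd on library_path (Linux only) and return its unresolved dependencies."""
    result = subprocess.run(["ldd", library_path], capture_output=True, text=True, check=False)
    return missing_libraries(result.stdout)
```

Running ldd_missing() on libonnxruntime_providers_cuda.so before and after each fix gives a quick yes/no answer; note that ldd itself honours LD_PRELOAD, as the output further below shows.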

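The comments there suggest setting the LD_LIBRARY_PATH or LD_PRELOAD environment variable; in my case, applying LD_PRELOAD fixed it. (The LD_LIBRARY_PATH attempt below used a relative path, ./miniconda3/..., which the loader resolves against the current working directory rather than the home directory, so that may be why it had no effect; an absolute path might well have worked.)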

```
# Did not work
# export LD_LIBRARY_PATH=./miniconda3/envs/huggingface-build/lib/python3.10/site-packages/nvidia/cudnn/lib:${LD_LIBRARY_PATH}

# OK
$ export LD_PRELOAD=/home/testusr/miniconda3/envs/huggingface-build/lib/python3.10/site-packages/nvidia/cudnn/lib/libcudnn.so.9

$ ldd /home/testusr/miniconda3/envs/huggingface-build/lib/python3.10/site-packages/onnxruntime/capi/libonnxruntime_providers_cuda.so
        linux-vdso.so.1 (0x00007ffee7b25000)
        /home/testusr/miniconda3/envs/huggingface-build/lib/python3.10/site-packages/nvidia/cudnn/lib/libcudnn.so.9 (0x00007fc40b600000)
...[omitted]...
```
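If you would rather not export LD_PRELOAD for the whole shell session, a commonly used alternative is to load libcudnn.so.9 into the Python process yourself before onnxruntime_genai loads its CUDA provider (which, per the tracebacks above, happens in og.Model(config)); the dynamic linker can then satisfy the provider's libcudnn.so.9 dependency from the already-loaded copy. This is untested reasoning, so treat it as a starting point: it is Linux only, preload_cudnn and default_cudnn_dirs are hypothetical helpers, and the nvidia/cudnn/lib location assumes the pip-installed cuDNN seen above.

```python
import ctypes
import sysconfig
from pathlib import Path


def default_cudnn_dirs() -> list:
    """Assumed location of pip-installed cuDNN: <site-packages>/nvidia/cudnn/lib."""
    return [Path(sysconfig.get_paths()["purelib"]) / "nvidia" / "cudnn" / "lib"]


def preload_cudnn(search_dirs, soname: str = "libcudnn.so.9"):
    """Load the first soname found in search_dirs into this process (Linux).

    Once loaded, a later dlopen() of libonnxruntime_providers_cuda.so can
    satisfy its libcudnn.so.9 dependency from the already-loaded copy.
    Returns the loaded path, or None if no candidate file exists.
    """
    for directory in search_dirs:
        candidate = Path(directory) / soname
        if candidate.is_file():
            ctypes.CDLL(str(candidate), mode=ctypes.RTLD_GLOBAL)
            return str(candidate)
    return None
```

The intended usage is to call preload_cudnn(default_cudnn_dirs()) near the top of phi4-mm.py, before `import onnxruntime_genai as og`, so the library is in place well before the model is created.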

What if you get an error like this?

```
$ python phi4-mm.py -m /mnt/c/foundry_cache/models/Phi-4-multimodal-instruct-onnx -e cuda
Loading model...
Setting model to cuda...
Model loaded
Traceback (most recent call last):
  File "/home/testusr/miniconda3/envs/huggingface-build/lib/python3.10/pathlib.py", line 625, in __str__
    return self._str
AttributeError: 'PosixPath' object has no attribute '_str'

During handling of the above exception, another exception occurred:

Traceback (most recent call last):
  File "/mnt/c/temp/phi4-mm.py", line 174, in <module>
    run(args)
  File "/mnt/c/temp/phi4-mm.py", line 75, in run
    default_paths=[str(_find_dir_contains_sub_dir(Path(__file__).parent, "test") / "test_models" / "images" / "australia.jpg")],
  File "/mnt/c/temp/phi4-mm.py", line 23, in _find_dir_contains_sub_dir
    return _find_dir_contains_sub_dir(curr_path / '..', target_dir_name)
  File "/mnt/c/temp/phi4-mm.py", line 23, in _find_dir_contains_sub_dir
    return _find_dir_contains_sub_dir(curr_path / '..', target_dir_name)
  File "/mnt/c/temp/phi4-mm.py", line 23, in _find_dir_contains_sub_dir
    return _find_dir_contains_sub_dir(curr_path / '..', target_dir_name)
  [Previous line repeated 986 more times]
  File "/mnt/c/temp/phi4-mm.py", line 16, in _find_dir_contains_sub_dir
    target_dir = glob.glob(target_dir_name, root_dir=curr_path)
  File "/home/testusr/miniconda3/envs/huggingface-build/lib/python3.10/glob.py", line 24, in glob
    return list(iglob(pathname, root_dir=root_dir, dir_fd=dir_fd, recursive=recursive))
  File "/home/testusr/miniconda3/envs/huggingface-build/lib/python3.10/glob.py", line 40, in iglob
    root_dir = os.fspath(root_dir)
  File "/home/testusr/miniconda3/envs/huggingface-build/lib/python3.10/pathlib.py", line 632, in __fspath__
    return str(self)
  File "/home/testusr/miniconda3/envs/huggingface-build/lib/python3.10/pathlib.py", line 627, in __str__
    self._str = self._format_parsed_parts(self._drv, self._root,
  File "/home/testusr/miniconda3/envs/huggingface-build/lib/python3.10/pathlib.py", line 611, in _format_parsed_parts
    return drv + root + cls._flavour.join(parts[1:])
RecursionError: maximum recursion depth exceeded while calling a Python object
```

Create an empty directory named "test" in the directory that contains phi4-mm.py or in one of its ancestors. For example, if phi4-mm.py lives in "/mnt/c/temp" (as in the run above), creating "/mnt/c/test" is enough. There seems to be little reason for this requirement, but phi4-mm.py computes its default image/audio paths eagerly (even in interactive mode) by searching upward from the script's directory for a "test" directory. Because each step appends ".." to the path without normalizing it, the root check (curr_path.parent == curr_path) never becomes true, so when no "test" directory exists the recursion continues until Python's recursion limit is hit.

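For reference, here is a sketch of a non-recursive replacement for _find_dir_contains_sub_dir (my own variant under the same contract, not an upstream fix): it resolves the starting path once and walks Path.parents, so the search stops at the filesystem root and returns None instead of raising RecursionError.

```python
from pathlib import Path
from typing import Optional


def find_dir_contains_sub_dir(current_dir, target_dir_name: str) -> Optional[Path]:
    """Search current_dir and each of its ancestors for target_dir_name.

    The start path is resolved once, and Path.parents ends at the filesystem
    root, so the search terminates with None instead of recursing forever.
    """
    curr_path = Path(current_dir).resolve()
    for directory in (curr_path, *curr_path.parents):
        matches = sorted(directory.glob(target_dir_name))
        if matches:
            return matches[0].resolve()
    return None
```

Callers still have to handle None, because run() immediately joins the result with "test_models"; with this variant, a missing "test" directory surfaces as an immediate TypeError rather than a thousand-frame recursion.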
[I'd like to share opinions about this article with you. If anything is wrong or lacking, or if you have questions, please feel free to leave a comment.]